Parallel computing

From CFD-Wiki


Revision as of 20:41, 4 December 2005


Introduction

Ever heard of "Divide and Conquer"? Ever heard of "Together we stand, divided we fall"? This is the whole idea of parallel computing. A complicated CFD problem involving combustion, heat transfer, turbulence, and a complex geometry needs to be tackled. The way to tackle it is to divide it and then conquer it. The computers unite their efforts to stand up to the challenge!

Parallel computing is defined as the simultaneous use of more than one processor to execute a program. This formal definition hides many intricacies. For instance, one cannot expect a given program to run on 1000 processors without any change to the original code. The program must contain instructions that guide it to run in parallel. Since the work is shared or distributed amongst different processors, data has to be exchanged now and then. This data exchange takes place using different methods depending on the type of parallel computer used. For example, on a network of PCs, a certain protocol has to be defined (or installed) to allow the data flow between PCs. The sections below describe some of the details involved.

Types of Parallel Computers

There are two fundamental types of parallel computers:

- A single computer with multiple internal processors, known as a Shared Memory Multiprocessor.

- A set of computers interconnected through a network, known as a Distributed Memory Multicomputer.

Each of these can be referred to as a Parallel Computer. In this section we briefly discuss the architecture of the above systems.

A conventional computer consists of a processor and a memory readily accessible by any instruction the processor is executing. The shared memory multiprocessor is a natural extension of the single processor: multiple processors are connected to multiple memory modules such that every memory location has a unique address in a single address space shared throughout the system. This means that any processor can readily access any memory location without any need for copying data from one memory to another.

Programming a shared memory multiprocessor is attractive for programmers because of the convenience offered by data sharing. However, care must be taken when altering the value at a given memory location, since cached copies of that variable also have to be updated for every processor using the data. Furthermore, simultaneous access to memory locations has to be controlled carefully.
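The need to control simultaneous access can be illustrated with a minimal sketch. It assumes Python threads as a stand-in for processors that share one address space; the function name `run_shared_counter` and the counter itself are hypothetical, and the sketch does not model hardware cache updates.

```python
import threading

def run_shared_counter(num_threads=4, increments=10_000):
    """Increment one shared counter from several threads (illustrative only)."""
    state = {"counter": 0}      # shared data: every thread sees the same object
    lock = threading.Lock()     # controls simultaneous access to the counter

    def worker():
        for _ in range(increments):
            with lock:          # only one thread may update the value at a time
                state["counter"] += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]

total = run_shared_counter()
```

No data is copied between the threads: each one reads and writes the same location, and the lock is the only thing that keeps their updates from interleaving.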

The major disadvantages of the shared memory multiprocessor are summarized in the following:

- Difficult to implement hardware able to achieve fast access to all shared memory locations

- High cost: design and manufacturing complexities

- Short life: upgrade is limited

Distributed Memory Multicomputer

The distributed memory multicomputer, or message passing multicomputer, consists of independent computers connected via an interconnection network as shown in the figure below. Inter-processor communication is achieved by sending messages explicitly from one computer to another using a message passing library such as MPI (Message Passing Interface). In such a setup, each computer has its own memory address space, so a processor can only access its own local memory. To access a value residing on a different computer, the value has to be copied by sending it in a message to the processor that needs it. The message passing multicomputer physically scales more easily than a shared memory multiprocessor, i.e. it can more easily be extended by adding more computers to the network.

Programming a message passing multicomputer requires programmers to place explicit calls to message passing routines in their programs, which is sometimes error prone. However, as recent progress and research in parallel computing has shown, message passing does not pose any insurmountable problem. Special mechanisms are not needed for controlling access to data since the data is copied from one computer to another. The most compelling reason for using message passing multicomputers is their direct applicability to existing computer networks. Of course, it is much better to use a new computer with a processor operating k times faster than each of the k processors in an old multiprocessor, especially if the new single processor costs much less than the multiprocessor. It is therefore also cheaper to buy N new processors and connect them through a network.
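The explicit send and receive calls described above can be sketched as follows. This is a single-machine simulation, not MPI: two threads play the role of two computers, each keeps its data in local variables, and the only exchange is through queues that stand in for the network. The names `node1`, `node0_fetch` and the stored `"pressure"` value are hypothetical. On a real multicomputer the `put` and `get` calls would be replaced by the send and receive routines of a message passing library such as MPI.

```python
import queue
import threading

def node1(inbox, outbox):
    """Owns its data privately and answers one read request sent as a message."""
    local_memory = {"pressure": 101325.0}   # visible only inside node1
    key = inbox.get()                        # receive: blocks until a request arrives
    outbox.put(local_memory[key])            # send: reply with a copy of the value

def node0_fetch(key):
    """Node 0 obtains a value that resides in node 1's local memory."""
    to_node1 = queue.Queue()    # channel node0 -> node1
    to_node0 = queue.Queue()    # channel node1 -> node0
    peer = threading.Thread(target=node1, args=(to_node1, to_node0))
    peer.start()
    to_node1.put(key)           # explicit send of the request
    value = to_node0.get()      # explicit receive of the reply
    peer.join()
    return value

remote_value = node0_fetch("pressure")
```

Node 0 never touches `local_memory` directly; it only ever sees the copy that arrives in the reply message, which is why no lock is needed here.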

In a distributed memory system, the Master Processor is whichever one of the computers acts as the job manager (orchestrator) and distributes jobs amongst the other computers. All pre-processing and post-processing is done on the master processor.

A Slave Processor refers to any one of the computers on the network that is not a master.
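The division of roles can be sketched in a few lines. This is an illustration under stated assumptions rather than a real cluster setup: a thread pool stands in for the other computers on the network, and the names `master` and `worker_job`, as well as the sum-of-squares workload, are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def worker_job(chunk):
    """Job run by a non-master processor: here, a partial sum of squares."""
    return sum(x * x for x in chunk)

def master(data, num_workers=4):
    """Pre-process, distribute jobs, then post-process the results."""
    # Pre-processing on the master: split the data into one chunk per worker.
    chunks = [data[i::num_workers] for i in range(num_workers)]
    # Distribution: each chunk is handed to one worker.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(worker_job, chunks))
    # Post-processing on the master: combine the partial results.
    return sum(partial_sums)

result = master(list(range(1, 101)))
```

The workers never see the whole data set or each other's results; only the master holds the full picture before and after the parallel step.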

Measuring Parallel Performance

There are various methods that are used to measure the performance of a certain parallel program. No single method is usually preferred over another since each of them, as will be seen later on, reflects certain properties of the parallel code.

Speedup

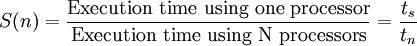

In the simplest of terms, the most obvious benefit of using a parallel computer is the reduction in the running time of the code. Therefore, a straightforward measure of the parallel performance would be the ratio of the execution time on a single processor (the sequential version) to that on a multicomputer. This ratio is defined as the speedup factor and is given as

<math>S(n)=\frac{\mbox{Execution time using one processor}}{\mbox{Execution time using n processors}}=\frac{t_s}{t_n}</math>

where <math>t_s</math> is the execution time on a single processor and <math>t_n</math> is the execution time on a multicomputer with n processors.

S(n) therefore describes the scalability of the system as the number of processors is increased. The ideal speedup is n when using n processors, i.e. when the computations can be divided into equal duration processes with each process running on one processor (with no communication overhead). Ironically, this is called embarrassingly parallel computing!

In some cases, superlinear speedup (S(n)>n) may be encountered. Usually this is caused either by using a suboptimal sequential algorithm or by some feature of the hardware architecture that favors the parallel computation. For example, one common reason for superlinear speedup is the extra memory in the multiprocessor system: the combined memory of all processors may hold data that a single processor would have to keep in slower storage.
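As a small worked example, the speedup factor can be computed from two measured run times and compared with the ideal value n. The function names and the timings used below are hypothetical and chosen only for illustration.

```python
def speedup(t_s, t_n):
    """Speedup factor S(n) = t_s / t_n."""
    if t_s <= 0 or t_n <= 0:
        raise ValueError("execution times must be positive")
    return t_s / t_n

def compare_with_ideal(t_s, t_n, n):
    """Return S(n) and how it compares with the ideal speedup n."""
    s = speedup(t_s, t_n)
    if s > n:
        regime = "superlinear"
    elif s == n:
        regime = "ideal"
    else:
        regime = "sublinear"
    return s, regime

# Hypothetical timings: 120 s on one processor, 20 s on 8 processors.
s, regime = compare_with_ideal(120.0, 20.0, 8)
```

With these assumed timings S(8) = 120/20 = 6, which is below the ideal value of 8, so the run is classified as sublinear.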